The EU AI Act (Regulation (EU) 2024/1689) came into force on the 1st of August 2024 with the goal of ensuring that Artificial Intelligence (AI) systems are safe, uphold fundamental rights, promote investment, enhance governance, and support a unified EU market.

Most of its provisions will become applicable in August 2026, at the end of a two-year implementation period; however, banned AI systems must be discontinued by February 2025, and the rules for general-purpose AI will apply from August 2025. During this time, supplementary legislation, standards, and guidance will be published to assist organisations in their compliance efforts.

The AI Act has a significant impact on businesses operating in the EU that develop, offer, or use AI products, services, or systems. It applies to a broad range of entities, including providers that place AI systems on the EU market or put them into service within the region. The Act covers AI providers and deployers (users) within the EU, as well as importers, distributors, and product manufacturers who place AI-integrated products on the market under their own name or trademark.

The Act adopts a risk-based approach, classifying AI systems into four categories:

- unacceptable,

- high,

- limited, and

- minimal risk.

AI Systems: Unacceptable risk

Article 5 addresses prohibited AI systems. Unlike other rules under the AI Act, which may vary depending on the operator, it applies equally to all operators, regardless of their role or identity. The prohibited uses include:

- social scoring by either public or private organisations;

- systems used to detect emotions in workplaces or schools;

- systems that create or expand facial recognition databases through the untargeted collection of images from the internet or CCTV footage;

- systems used to assess or predict the likelihood of someone committing a crime based on profiling or personality traits;

- systems that categorise people based on biometric data to infer personal characteristics such as race, political views, trade union membership, religious beliefs, or sexual orientation;

- techniques that are subliminal, manipulative, or deceptive;

- taking advantage of people’s vulnerabilities based on age, disability, or specific social or economic situations; and

- real-time biometric identification systems used in public spaces for law enforcement purposes.

Violating Article 5 could result in a fine of up to €35 million or 7% of the company’s total worldwide annual turnover, whichever is higher.
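The penalty ceiling for prohibited practices is a simple "whichever is higher" rule, which the following Python sketch illustrates. The function name `max_article5_fine` and its parameter are hypothetical names chosen for this example, and the sketch assumes the company's global annual revenue (turnover) is given in whole euros. It computes only the ceiling described above, not the fine an authority would actually impose, and it ignores special cases such as the treatment of SMEs.

```python
def max_article5_fine(worldwide_annual_turnover_eur: int) -> int:
    """Return the ceiling of an Article 5 fine in euros.

    Illustrative only: the ceiling is the higher of a fixed EUR 35 million
    and 7% of worldwide annual turnover. Integer arithmetic is used so the
    result is not affected by floating-point rounding.
    """
    fixed_cap_eur = 35_000_000
    turnover_share_eur = worldwide_annual_turnover_eur * 7 // 100
    return max(fixed_cap_eur, turnover_share_eur)


# A company with EUR 1 billion turnover: 7% exceeds the fixed amount.
print(max_article5_fine(1_000_000_000))  # 70000000

# A company with EUR 100 million turnover: the fixed amount is higher.
print(max_article5_fine(100_000_000))  # 35000000
```

On these assumptions, the fixed €35 million amount is the higher figure for any company with a turnover below €500 million; above that level, the 7% share takes over.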

AI Systems: High-risk

AI systems that pose a threat to safety or fundamental rights will be classified as high-risk and further categorised into two groups:

- The first category includes AI systems used in products covered by EU safety regulations, such as toys, aviation, automobiles, medical devices, and elevators.

- The second category includes AI systems used in specific sectors, such as education (e.g. automated scoring) and employment (e.g. automated recruitment), which must be registered in an EU database.

High-risk AI systems are permitted but must adhere to strict regulations to manage risks throughout their lifecycle, ensure data quality, and provide clear documentation to demonstrate compliance. Events related to these systems must be logged to ensure transparency, with records maintained to track high-risk situations and prevent discrimination; the logs must capture basic details such as usage, data, and personnel involved. The systems must be transparent, with instructions available in a user-friendly format. Human oversight is required, and the systems must be accurate, reliable, and secure.

High-risk AI systems will need to undergo a conformity assessment to make sure they meet the requirements of the Act. Before placing a system on the market, providers must sign a declaration confirming that it is compliant and must affix the CE marking to show that it meets European standards. After the AI system is on the market, providers must continue monitoring it and report any serious issues or failures to the relevant authorities.

Organisations deploying high-risk AI systems, including both public bodies and private entities offering essential services like banks, hospitals, and schools, have a responsibility to ensure the systems are used properly. These responsibilities include assessing potential impacts on fundamental rights before deploying the system, ensuring trained staff provide human oversight, and making sure the data used is relevant to the system’s purpose. They must also suspend the system if national-level risks arise, report serious incidents to the AI provider, and keep system logs. Public authorities must comply with specific registration requirements and data protection laws, such as GDPR. Furthermore, they need to verify that the AI system complies with the Act, keep all necessary documentation, and inform people about the use of high-risk AI.

General-purpose Artificial Intelligence (GPAI)

Rules have been introduced to address recent developments in general-purpose AI (“GPAI”) models, including large generative AI models. GPAI systems can perform a broad range of tasks, such as image and speech recognition, audio and video generation, and pattern detection, and their capabilities are growing rapidly. For this reason, GPAI systems and their underlying models must meet transparency requirements, which include providing technical documentation, adhering to EU copyright laws, and sharing information about the data used for training.

Stricter rules will be in place for the most advanced foundation models. Providers will need to assess how the models perform, address any potential risks, test for weaknesses, report serious issues to the authorities, and make sure the models are safe and energy efficient.

AI Systems: Limited-risk

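AI systems designed to interact with people or create content may not always be classified as high-risk, but they can still pose risks, such as the potential for impersonation or deception. This includes many generative AI systems. Such systems are subject to transparency obligations, for example making people aware that they are interacting with an AI system. Examples of these systems are: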

- Chatbots

- Emotion-recognition systems

- Biometric categorisation systems

- Systems that generate ‘deepfake’ content

AI Systems: Minimal-risk

Minimal-risk AI systems are those that pose little or no threat to the rights, safety, or well-being of individuals and therefore require minimal regulatory oversight. They generally do not impact fundamental rights, safety, or critical interests. These systems are typically used for simple, common, or assistive purposes where the potential for harm is extremely low.

AI used in video games, for instance, falls under this category. These systems will not face strict rules beyond basic product safety standards. However, there is strong encouragement to create guidelines that promote the use of trustworthy AI across the EU.

Article written by Dr. Lara Borg Bugeja, Senior Lawyer and Dr. Suzana Tabone, Junior Lawyer

How can BDO Malta help

We recognise the potential of artificial intelligence (AI) to bring about significant change, but it can only reach its full value when combined with human expertise, creativity and proper risk management.

We recognise that as AI continues to evolve, so do its regulatory challenges. We provide the guidance and expertise businesses need to stay compliant, mitigate risks, and harness AI’s full potential responsibly.

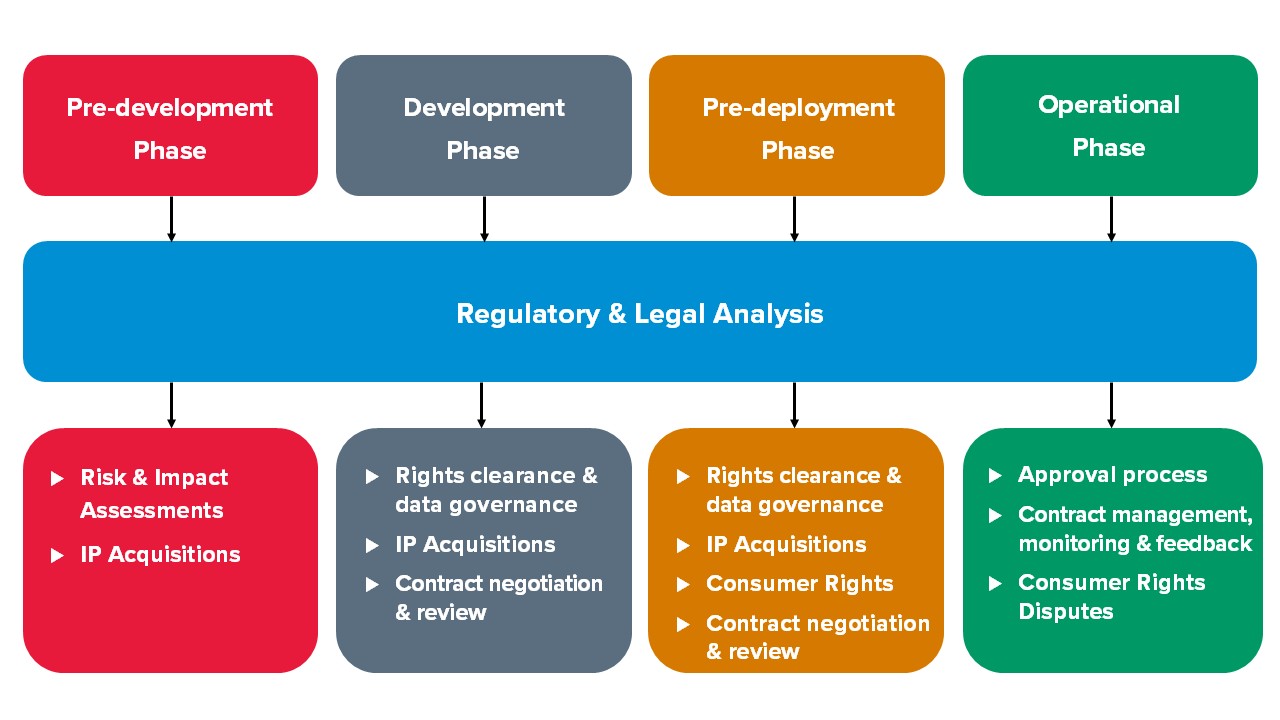

The Act establishes a rigorous regulatory framework, and each AI application comes with its own set of business processes, impacts, and risks. Our legal team offers comprehensive support at every stage of the AI product cycle, analysing the regulatory requirements and ensuring compliance with them, while addressing governance and risk concerns to help you improve your processes and policies.

Legal Services related to the AI product cycle